LLM-Powered AI Assistants

Next-Generation Conversational AI Powered by Large Language Models

TL;DR: LLM-Powered AI Assistant Development

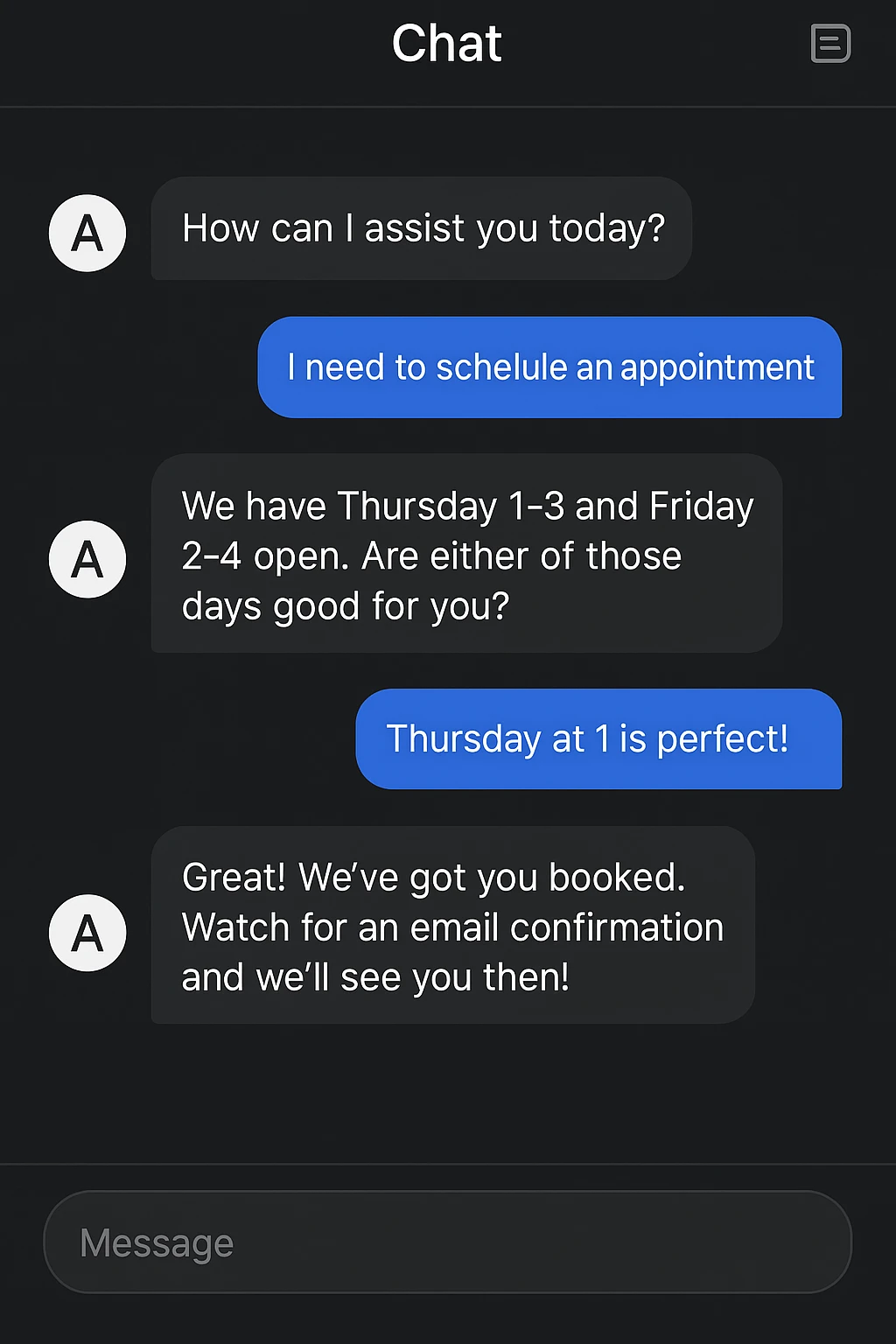

Hyperfly develops AI Assistants powered by Large Language Models (LLMs) such as GPT-4 and Claude. Unlike traditional chatbots, these systems understand context, can be refined using feedback from real interactions, and generate human-like responses to complex queries. Our LLM-powered solutions handle nuanced conversations, draw on your business knowledge, generate creative content, and work through multifaceted problems. Whether you need a customer service assistant with deep product knowledge, a sales representative that understands complex offerings, or an internal knowledge management system, our LLM-powered assistants deliver results through current generative AI technology.

What are LLM-Powered AI Assistants?

LLM-powered AI Assistants represent the cutting edge of conversational artificial intelligence, built on breakthrough Large Language Models (LLMs) like GPT-4, Claude, or similar foundation models. These sophisticated systems go far beyond traditional chatbots by leveraging neural networks with billions of parameters trained on vast datasets of text and code. This advanced architecture enables them to understand complex language nuances, maintain context across lengthy conversations, reason through problems, generate creative content, and integrate seamlessly with your business systems to deliver truly intelligent interactions.

At Hyperfly Developers, we use Large Language Models (LLMs) to create AI Assistants that transform how businesses interact with their customers and employees. Our LLM-powered solutions understand complex queries, maintain context across conversations, and generate nuanced, helpful responses that feel remarkably human.

We implement sophisticated LLM-powered assistants for diverse applications including customer support with deep product knowledge, sales representatives that understand complex offerings, knowledge workers that synthesize information from diverse sources, creative assistants that generate content, and internal tools that make your organization's collective knowledge instantly accessible. Each solution is custom-engineered with the optimal foundation model, fine-tuned on your specific domain, and enhanced with retrieval-augmented generation to incorporate your proprietary information.

Our development approach leverages prompt engineering, vector databases, semantic search, and context management to overcome the limitations of foundation models while maximizing their strengths. We implement comprehensive safety guardrails, fact-checking mechanisms, and continuous human feedback loops to ensure reliability, accuracy, and alignment with your brand values. The result is a system that combines the breadth of knowledge from Large Language Models with the precision and security required for enterprise applications.
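As a rough illustration of how semantic search feeds a prompt in retrieval-augmented generation, the sketch below ranks a few documents against a query and places the best match into the prompt text. It is a minimal sketch under stated assumptions, not Hyperfly's implementation: the `embed` function is a toy word-count stand-in for a real embedding model, the in-memory list stands in for a vector database, and every function name and sample document is hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts (an assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved text."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical business documents used only for illustration.
DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Support is available by email around the clock.",
    "The premium plan includes priority onboarding.",
]

if __name__ == "__main__":
    print(build_prompt("How many days until refunds are issued?", DOCS, k=1))
```

In a production system the word-count vectors would be replaced by embeddings from a model and the list by a vector database, but the shape of the flow (embed, rank, insert into the prompt) stays the same.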

With our LLM-powered AI Assistants, you can automate complex workflows, provide consistent 24/7 support with human-like understanding, unlock insights from unstructured data, and deliver personalized experiences at scale. Whether you're looking to revolutionize customer interactions, augment employee capabilities, or streamline knowledge-intensive processes, our cutting-edge LLM solutions help you harness the transformative potential of generative AI safely and effectively.

Frequently Asked Questions

What are LLM-powered AI Assistants and how do they work?

LLM-powered AI Assistants are advanced conversational interfaces built on Large Language Models (LLMs) like GPT-4, Claude, or similar foundation models. Unlike traditional rule-based chatbots, these assistants work by:

- Leveraging massive pre-trained language models that understand context, nuance, and intent

- Processing natural language queries through sophisticated transformer-based architectures

- Generating human-like responses using billions of parameters learned from training data

- Connecting to knowledge bases and business systems through specialized integrations

- Improving over time through periodic fine-tuning and reinforcement learning from feedback

Modern LLM-powered assistants can maintain long, coherent conversations, understand complex queries, remember user preferences across interactions when paired with persistent memory, and perform sophisticated tasks like drafting content, analyzing data, or executing multi-step processes on behalf of users.
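Keeping a long conversation coherent usually means deciding which earlier turns still fit in the model's context window. The sketch below shows one simple policy: always keep system messages, then keep the most recent turns that fit a token budget. It is illustrative only. The `Message` class, the `trim_history` function, and the one-token-per-word estimate are all assumptions made for this example, and a real deployment would use the model's own tokenizer.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str      # "system", "user", or "assistant"
    content: str

def estimate_tokens(text: str) -> int:
    """Rough estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())

def trim_history(messages: list[Message], budget: int) -> list[Message]:
    """Keep system messages plus the most recent turns that fit within `budget`."""
    system = [m for m in messages if m.role == "system"]
    rest = [m for m in messages if m.role != "system"]
    remaining = budget - sum(estimate_tokens(m.content) for m in system)
    kept: list[Message] = []
    for message in reversed(rest):          # walk from newest to oldest
        cost = estimate_tokens(message.content)
        if cost > remaining:
            break                           # older turns no longer fit
        kept.append(message)
        remaining -= cost
    return system + kept[::-1]              # restore chronological order

# Hypothetical conversation used only for illustration.
HISTORY = [
    Message("system", "You are a helpful assistant."),
    Message("user", "My name is Ada."),
    Message("assistant", "Nice to meet you, Ada."),
    Message("user", "What plans do you offer?"),
]

if __name__ == "__main__":
    for m in trim_history(HISTORY, budget=15):
        print(f"{m.role}: {m.content}")
```

With a budget of 15, the oldest user turn is dropped, which is why facts such as a user's name or preferences are typically written to a separate store rather than left in the rolling history.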

How do LLM-powered AI Assistants differ from traditional chatbots?

LLM-powered AI Assistants go well beyond traditional chatbots in several key ways:

- Natural language understanding: LLMs comprehend complex queries, slang, and even poorly worded questions that would confuse rule-based systems

- Contextual awareness: They maintain context throughout lengthy conversations, within the limits of the model's context window

- Knowledge breadth: LLMs draw on knowledge absorbed from their training data, allowing them to address a wide range of topics without explicit programming

- Generative capabilities: They can create original content, summaries, and creative solutions rather than just selecting pre-written responses

- Reasoning abilities: They can follow multi-step reasoning processes, make inferences, and draw conclusions

- Adaptability: They can adjust their tone, style, and approach based on the interaction context

- Continuous improvement: They can be improved over time through techniques like reinforcement learning from human feedback (RLHF)

These capabilities enable LLM-powered assistants to handle far more complex tasks and provide significantly more valuable interactions than traditional chatbots ever could.

What is your approach to developing LLM-powered AI Assistants?

At Hyperfly, our approach to developing LLM-powered AI Assistants follows these key steps:

- LLM selection: We identify the optimal foundation model (GPT-4, Claude, etc.) based on your specific requirements and use case

- Knowledge integration: We connect your business data, documentation, and domain knowledge to the LLM through retrieval-augmented generation (RAG)

- Custom fine-tuning: We train the model on your specific industry terminology, brand voice, and customer interaction patterns

- System design: We architect the entire solution including prompt engineering, context management, and integration frameworks

- Guardrails implementation: We establish content filters, fact-checking mechanisms, and ethical boundaries to ensure responsible AI use

- Multi-modal capabilities: When appropriate, we integrate image, audio, or video processing capabilities alongside text

- Deployment and monitoring: We implement the solution with comprehensive analytics, performance monitoring, and continuous improvement processes

- Feedback loops: We establish mechanisms to collect user interactions and refine the model through reinforcement learning
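To make the guardrails step above more concrete, here is a minimal sketch of an output check that runs before a response reaches the user. It is a sketch under stated assumptions, not a description of Hyperfly's actual safeguards: the pattern list, the `check_response` function, and the rule that every number in an answer must appear in the retrieved sources are all hypothetical choices for this example.

```python
import re

# Hypothetical content filters: label -> pattern that should never appear in a reply.
BLOCKED_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN-like number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

NUMBER = re.compile(r"\d+(?:\.\d+)?")

def check_response(answer: str, sources: list[str]) -> list[str]:
    """Return a list of problems found in `answer`; an empty list means it passed."""
    problems: list[str] = []

    # Content filter: flag anything matching a blocked pattern.
    for label, pattern in BLOCKED_PATTERNS.items():
        if pattern.search(answer):
            problems.append(f"blocked content: {label}")

    # Crude fact check: every figure in the answer must appear in some source.
    supported = {n for source in sources for n in NUMBER.findall(source)}
    for figure in NUMBER.findall(answer):
        if figure not in supported:
            problems.append(f"unsupported figure: {figure}")

    return problems

if __name__ == "__main__":
    sources = ["Refunds are issued within 14 days of purchase."]
    print(check_response("Refunds are issued within 14 days.", sources))
    print(check_response("Refunds are issued within 30 days.", sources))
```

A response that fails the check could be regenerated, routed to a human reviewer, or replaced with a safe fallback. Which of these applies is a design decision for each deployment.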

Throughout this process, we emphasize creating truly intelligent conversational experiences that deliver tangible business value. Our goal is to develop AI Assistants that not only respond accurately but also provide insights, solve complex problems, and create delightful user experiences that strengthen your customer relationships.